Today D-Wave Systems announced details of its most advanced quantum computing system, featuring a new 2000-qubit processor. The announcement is being made at the company’s inaugural users group conference in Santa Fe, New Mexico. The new processor doubles the number of qubits over the previous generation D-Wave 2X system, enabling larger problems to be solved and extending D-Wave’s significant lead over all quantum computing competitors. The new system also introduces control features that allow users to tune the quantum computational process to solve problems faster and find more diverse solutions when they exist. In early tests these new features have yielded performance improvements of up to 1000 times over the D-Wave 2X system.

Archives for September 2016

ARM Releases CoreLink Interconnect

“The demands of cloud-based business models require service providers to pack more efficient computational capability into their infrastructure,” said Monika Biddulph, general manager, systems and software group, ARM. “Our new CoreLink system IP for SoCs, based on the ARMv8-A architecture, delivers the flexibility to seamlessly integrate heterogeneous computing and acceleration to achieve the best balance of compute density and workload optimization within fixed power and space constraints.”

Video: How ORNL is Bridging the Gap between Computing and Facilities

“Starting in 2015, Oak Ridge National Laboratory partnered with the University of Tennessee to offer a minor-degree program in data center technology and management, one of the first offerings of its kind in the country. ORNL staff members developed the senior-level course in collaboration with UT College of Engineering professor Mark Dean after an ORNL strategic partner identified a need for employees who could bridge both the facilities and operational aspects of running a data center. In addition to developing the course curriculum, ORNL staff members are also serving as guest lecturers.”

Baidu Research Announces DeepBench Benchmark for Deep Learning

“Deep learning developers and researchers want to train neural networks as fast as possible. Right now we are limited by computing performance,” said Dr. Diamos. “The first step in improving performance is to measure it, so we created DeepBench and are opening it up to the deep learning community. We believe that tracking performance on different hardware platforms will help processor designers better optimize their hardware for deep learning applications.”

Register Now for GPU Mini-Hackathon at ORNL Nov. 1-3

Oak Ridge National Lab is hosting a 3-day GPU Mini-hackathon led by experts from the OLCF and Nvidia. The event takes place Nov. 1-3 in Knoxville, Tennessee. “General-purpose Graphics Processing Units (GPGPUs) potentially offer exceptionally high memory bandwidth and performance for a wide range of applications. The challenge in utilizing such accelerators has been the difficulty in programming them. This event will introduce you to GPU programming techniques.”

Earlham Institute Tests Green HPC from Verne Global in Iceland

“As more organizations turn to high performance computing to process large data sets, demand is growing for scalable and secure data centre solutions. The source, availability and reliability of the power grid infrastructure is becoming a critical factor in a data centre site selection decision,” said Jeff Monroe, CEO at Verne Global. “Verne Global is able to deliver EI a forward-thinking path for growth with a solution that combines unparalleled cost savings with operational efficiencies to support their data-intensive research.”

University of Tokyo to Deploy IME14K Burst Buffer on Reedbush Supercomputer

Today DDN Japan announced that the University of Tokyo and the Joint Center for Advanced High Performance Computing (JCAHPC) have selected DDN’s burst buffer solution “IME14K” for their new Reedbush supercomputer. “Many problems in science and research today are located at the intersections of HPC and Big Data, and storage and I/O are increasingly important components of any large compute infrastructure.”

Radio Free HPC Looks for the Forever Data Format

In this podcast, the Radio Free HPC team discusses Henry Newman’s recent editorial calling for a self-descriptive data format that will stand the test of time. Henry contends that we seem headed for massive data loss unless we act.

Quantum Powers Petascale Storage at Two Major European Research Institutions

Today Quantum Corp. announced that two of Europe’s premier research institutions are using the company’s StorNext workflow storage as the foundation for managing their growing data and enabling a range of scientific initiatives. “With the StorNext platform, we have removed barriers to research,” said Thomas Disper, CISO and Head of IT, Max Planck Institute for Chemistry. “It allows us to provide a lot more capacity quickly and easily. We don’t need to give research teams data limits, and storage for new projects can be ready in an afternoon.”

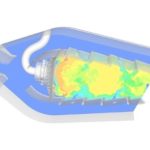

SGI and ANSYS Achieve New World Record in HPC

Over at the ANSYS Blog, Tony DeVarco writes that the company worked with SGI to break a world record for HPC scalability. “Breaking last year’s 129,024-core record by more than 16,000 cores, SGI was able to run the ANSYS-provided 830 million cell gas combustor model from 1,296 to 145,152 CPU cores. This reduces the total solver wall clock time to run a single simulation from 20 minutes for 1,296 cores to a mere 13 seconds using 145,152 cores and achieving an overall scaling efficiency of 83%.”
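
For readers who want to check the arithmetic behind that figure, here is a minimal sketch of the usual strong-scaling efficiency calculation (measured speedup divided by the ideal speedup implied by the core count). The function name and the choice of formula are assumptions for illustration; the post does not say exactly how ANSYS or SGI computed their number. Using the rounded times from the quote, the result lands near 82%, which is in line with the quoted 83% if the published timings were rounded.

```python
def scaling_efficiency(base_cores, base_seconds, scaled_cores, scaled_seconds):
    """Strong-scaling efficiency: measured speedup over ideal (linear) speedup.

    Hypothetical helper for illustration; not taken from the ANSYS or SGI posts.
    """
    speedup = base_seconds / scaled_seconds   # how much faster the run got
    ideal = scaled_cores / base_cores         # how much faster it could get at best
    return speedup / ideal


# Figures as quoted in the excerpt (times are rounded in the source).
base_cores, scaled_cores = 1_296, 145_152
base_seconds, scaled_seconds = 20 * 60, 13

efficiency = scaling_efficiency(base_cores, base_seconds, scaled_cores, scaled_seconds)

print(f"core ratio: {scaled_cores / base_cores:.0f}x")
print(f"speedup:    {base_seconds / scaled_seconds:.1f}x")
print(f"efficiency: {efficiency:.1%}")
```

The same helper can be applied to any pair of runs on the same problem size, which is the setting where this definition of efficiency is normally used.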