Google today introduced the Accelerator-Optimized VM (A2) instance family on Google Compute Engine, based on the NVIDIA Ampere A100 Tensor Core GPU that launched in mid-May. Available in alpha and with up to 16 GPUs, A2 VMs are the first A100-based offering in a public cloud, according to Google. At launch, NVIDIA said the A100, built on the company’s new Ampere architecture, delivers “the greatest generational leap ever,” enhancing training and inference computing performance by 20x over its predecessors.

Articles and news on parallel programming and code modernization

SeRC Turns to oneAPI Multi-Chip Programming Model for Accelerated Research

At ISC 2020 Digital, the Swedish e-Science Research Center (SeRC) in Stockholm announced plans for researchers conducting massive simulations powered by CPUs and GPUs to use Intel’s oneAPI unified programming model. The center said it chose oneAPI, which is designed to span CPUs, GPUs, FPGAs and other architectures and silicon, to accelerate compute for research using GROMACS (GROningen MAchine for Chemical Simulations) molecular dynamics software, developed by SeRC and first released in 1991.

‘Rocky Year’ – Hyperion’s HPC Market Update: COVID-19 Hits Q1 Revenues, Cloud HPC Boom, Shift in Server Vendor Standings

Instead of presenting its usual mid-year HPC market update at the ISC conference in Frankfurt, industry analyst firm Hyperion Research has released its latest findings virtually, including estimates of COVID-19’s impact on the industry, the growth of HPC in public clouds and a significant shift in the competitive standing among the leading HPC server vendors. Taking 2019 in total, Hyperion sized the HPC server market at $13.7 billion, record revenues

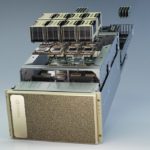

New NVIDIA DGX A100 Packs Record 5 Petaflops of AI Performance for Training, Inference, and Data Analytics

Today NVIDIA unveiled the NVIDIA DGX A100 AI system, delivering 5 petaflops of AI performance and consolidating the power and capabilities of an entire data center into a single flexible platform. “DGX A100 systems integrate eight of the new NVIDIA A100 Tensor Core GPUs, providing 320GB of memory for training the largest AI datasets, and the latest high-speed NVIDIA Mellanox HDR 200Gbps interconnects.”

Podcast: A Shift to Modern C++ Programming Models

In this Code Together podcast, Alice Chan from Intel and Hal Finkel from Argonne National Lab discuss how the industry is uniting to address the need for programming portability and performance across diverse architectures, particularly important with the rise of data-intensive workloads like artificial intelligence and machine learning. “We discuss the important shift to modern C++ programming models, and how the cross-industry oneAPI initiative, and DPC++, bring much-needed portable performance to today’s developers.”

Video: Profiling Python Workloads with Intel VTune Amplifier

Paulius Velesko from Intel gave this talk at the ALCF Many-Core Developer Sessions. “This talk covers efficient profiling techniques that can help to dramatically improve the performance of code by identifying CPU and memory bottlenecks. We will demonstrate how to profile a Python application using Intel VTune Amplifier, a full-featured profiling tool.”

Breaking Boundaries with Data Parallel C++

“There’s a new programming language in town. Called Data Parallel C++ (DPC++), it allows developers to reuse code across diverse hardware targets—CPUs and accelerators—and perform custom tuning for a specific accelerator. DPC++ is part of oneAPI—an Intel-led initiative to create a unified programming model for cross-architecture development. Based on familiar C++ and SYCL, DPC++ is an open alternative to single-architecture proprietary approaches and helps developers create solutions that better meet specialized workload requirements.”

New Intel oneAPI DevCloud makes it easier for coders working from home

Today Intel introduced the oneAPI DevCloud to make it easier and more productive for coders currently working from home. “Developing code at home requires access to compute cycles, the latest software development tools, access across diverse hardware architectures—CPUs, GPUs, and FPGAs, and expanded storage capabilities. Through the new oneAPI DevCloud, Intel aims to provide extended access, capacity and support for oneAPI developers working from home.”

Software-defined Microarchitecture: An Arguably Terrible Idea, But Certainly Not The Worst Idea

James Mickens from Harvard University gave this talk at HiPEAC 2020. “In this presentation, I will describe some of the benefits that would emerge from a new kind of processor that aggressively exposes microarchitectural state and allows it to be programmed. Using elaborate hand gestures and cheap pleas for sympathy, I will explain why my proposals are different than prior ‘open microarchitecture’ ideas like transport-triggered designs.”

Podcast: One Big Debate over OneAPI

In this podcast, the Radio Free HPC team looks at Intel’s oneAPI project. “The OneAPI project is a highly ambitious initiative; trying to design a single API to handle CPUs, GPUs, FPGAs, and other types of processors. In the discussion, we look under the hood and see how this might work. One thing working in Intel’s favor is that they’re using data parallel C++, which is highly compatible with CUDA – and which is probably Intel’s target with this new initiative.”