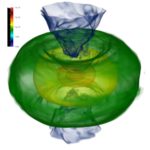

Using the Titan supercomputer at Oak Ridge National Laboratory, a team of astrophysicists created a set of galactic wind simulations of the highest resolution ever performed. The simulations will allow researchers to gather and interpret more accurate, detailed data that elucidates how galactic winds affect the formation and evolution of galaxies.

Supercomputing Neutron Star Structures and Mergers

Over at XSEDE, Kimberly Mann Bruch and Jan Zverina from the San Diego Supercomputer Center write that researchers are using supercomputers to create detailed simulations of neutron star structures and mergers to better understand gravitational waves, which were detected for the first time in 2015. “XSEDE resources significantly accelerated our scientific output,” noted Paschalidis, whose group members have been using XSEDE for well over a decade, since they were students or post-doctoral researchers. “If I were to put a number on it, I would say that using XSEDE accelerated our research by a factor of three or more, compared to using local resources alone.”

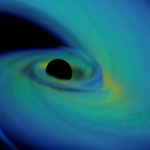

When Neutron Stars and Black Holes Collide

Working with an international team, scientists at Berkeley Lab have developed new computer models to explore what happens when a black hole joins with a neutron star – the superdense remnant of an exploded star. “If we can follow up LIGO detections with telescopes and catch a radioactive glow, we may finally witness the birthplace of the heaviest elements in the universe,” he said. “That would answer one of the longest-standing questions in astrophysics.”

Supercomputing the Black Hole Speed Limit

“Supermassive black holes have a speed limit that governs how fast and how large they can grow,” said Joseph Smidt of the Theoretical Design Division at LANL. Using computer codes developed at Los Alamos for modeling the interaction of matter and radiation related to the Lab’s stockpile stewardship mission, Smidt and colleagues created a simulation of collapsing stars that resulted in supermassive black holes forming in less time than expected, cosmologically speaking, in the first billion years of the universe.

Supercomputing and the Search for Planet 9

Still unobserved, Planet 9 is within the gravitational influence of our sun, completing one revolution in approximately 20,000 years. That means its orbital clock runs so slowly that it has not been around the sun since our last ice age. “The work could not have been completed without the heavy lifting provided by supercomputers at California Institute of Technology (Caltech). There, Batygin employed the Fram supercomputer at CITerra for four months to simulate four billion years of solar system evolution. Fram consists of almost 4,000 cores, running on the NSF-funded Rocks software environment, with 512TB of Lustre file storage.”

Sandia’s Z Machine Helps Pinpoint Age of Saturn

How old is Saturn? Researchers are using Sandia’s Z machine to find out.

Nobel Lecture Series: Data, Computation and the Fate of the Universe

In this video from the NERSC Nobel Lecture Series, Saul Perlmutter from Lawrence Berkeley National Lab presents “Data, Computation and the Fate of the Universe.” Perlmutter won the 2011 Nobel Prize in Physics. As part of our feature, we’ve included an insideHPC interview with Saul Perlmutter from SC13.

Algorithmic and Software Challenges For Numerical Libraries at Exascale

In this video from the Exascale Computing in Astrophysics Conference in Switzerland, Jack Dongarra from the University of Tennessee presents “Algorithmic and Software Challenges For Numerical Libraries at Exascale.”

Interview: Universe has Some Surprises in Store, Says Nobel Laureate Saul Perlmutter

In this special feature, John Kirkley sits down with Dr. Saul Perlmutter. A Nobel Prize-winning astrophysicist, Dr. Perlmutter gave a talk at SC13 that focused on how integrating big data with careful analysis led to the discovery of the acceleration of the universe’s expansion.