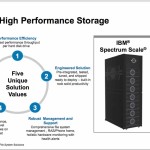

[SPONSORED CONTENT] The past few years have brought many changes to the HPC storage world, both in technologies such as non-volatile memory express (NVMe) and persistent memory and in the growth of software-defined storage solutions. Gone are the days when IBM Spectrum Scale (secretly, we know we all still call it GPFS) or Lustre were the only real choices in the market. In retrospect, the choice was easy: you picked one of the two and away you went. And nobody ever wondered if they made the right choice.

Enabling Oracle Cloud Infrastructure with IBM Spectrum Scale

In this video, Doug O’Flaherty from IBM describes how Spectrum Scale Storage (GPFS) helps Oracle Cloud Infrastructure deliver high performance for HPC applications. “To deliver insights, an organization’s underlying storage must support new-era big data and artificial intelligence workloads along with traditional applications while ensuring security, reliability and high performance. IBM Spectrum Scale meets these challenges as a high-performance solution for managing data at scale with the distinctive ability to perform archive and analytics in place.”

Video: The Marriage of Cloud, HPC and Containers

Adam Huffman from the Francis Crick Institute gave this talk at FOSDEM’17. “We will present experiences of supporting HPC/HTC workloads on private cloud resources, with ideas for how to do this better and description of trends for non-traditional HPC resource provision. I will discuss my work as part of the Operations Team for the eMedLab private cloud, which is a large-scale (6000-core, 5PB) biomedical research cloud using HPC hardware, aiming to support HPC workloads.”

NEC’s Aurora Vector Engine & Advanced Storage Speed HPC & Machine Learning at ISC 2017

In this video from ISC 2017, Oliver Tennert from NEC Deutschland GmbH introduces the company’s advanced technologies for HPC and Machine Learning. “Today NEC Corporation announced that it has developed data processing technology that accelerates the execution of machine learning on vector computers by more than 50 times in comparison to Apache Spark technologies.”

Consolidating Storage for Scientific Computing

In this special guest feature from Scientific Computing World, Shailesh M Shenoy from the Albert Einstein College of Medicine in New York discusses the challenges faced by large medical research organizations in the face of ever-growing volumes of data. “In short, our challenge was that we needed the ability to collaborate within the institution and with colleagues at other institutes – we needed to maintain that fluid conversation that involves data, not just the hypotheses and methods.”

Video: Supercomputing at the University of Buffalo

In this WGRZ video, researchers describe supercomputing at the Center for Computational Research at the University of Buffalo. “The Center’s extensive computing facilities, which are housed in a state-of-the-art 4000 sq ft machine room, include a generally accessible (to all UB researchers) Linux cluster with more than 8000 processor cores and QDR Infiniband, a subset (32) of which contain (64) NVidia Tesla M2050 “Fermi” graphics processing units (GPUs).”

Lustre: This is Not Your Grandmother’s (or Grandfather’s) Parallel File System

“Over the last several years, an enormous amount of development effort has gone into Lustre to address users’ enterprise-related requests. Their work is not only keeping Lustre extremely fast (the Spider II storage system at the Oak Ridge Leadership Computing Facility (OLCF) that supports OLCF’s Titan supercomputer delivers 1 TB/s; and Data Oasis, supporting the Comet supercomputer at the San Diego Supercomputer Center (SDSC), supports thousands of users with 300 GB/s throughput) but also making it an enterprise-class parallel file system that has since been deployed for many mission-critical applications, such as seismic processing and analysis, regional climate and weather modeling, and banking.”

Video: General Atomics Delivers Data-Aware Cloud Storage Gateway with ArcaStream

“Ngenea’s blazingly fast on-premises storage stores frequently accessed active data on the industry’s leading high-performance file system, IBM Spectrum Scale (GPFS). Less frequently accessed data, including backup, archival data and data targeted to be shared globally, is directed to cloud storage based on predefined policies such as age, time of last access, frequency of access, project, subject, study or data source. Ngenea can direct data to specific cloud storage regions around the world to facilitate remote low-latency data access and empower global collaboration.”

Slidecast: Seagate Beefs Up ClusterStor at SC15

In this video from SC15, Larry Jones from Seagate provides an overview of the company’s revamped HPC storage product line, including a new 10,000 RPM ClusterStor hard disk drive tailor-made for the HPC market. “ClusterStor integrates the latest in Big Data technologies to deliver class-leading ingest speeds, massively scalable capacities to more than 100PB and the ability to handle a variety of mixed workloads.”

World’s First Data-Aware Cloud Storage Gateway Coming to SC15

Today ArcaStream and General Atomics introduced Ngenea, the world’s first data-aware cloud storage gateway. Ready for quick deployment, Ngenea seamlessly integrates with popular cloud and object storage providers such as Amazon S3, Google GCS, Scality, Cleversafe and Swift and is optimized for data-intensive workflows in life science, education, research, and oil and gas exploration.