NERSC has signed a contract with NVIDIA to enhance GPU compiler capabilities for Berkeley Lab’s next-generation Perlmutter supercomputer. “We are excited to work with NVIDIA to enable OpenMP GPU computing using their PGI compilers,” said Nick Wright, the Perlmutter chief architect. “Many NERSC users are already successfully using the OpenMP API to target the manycore architecture of the NERSC Cori supercomputer. This project provides a continuation of our support of OpenMP and offers an attractive method to use the GPUs in the Perlmutter supercomputer. We are confident that our investment in OpenMP will help NERSC users meet their application performance portability goals.”

Search Results for: mpi

Intel becomes First Corporate Champion for Women in HPC

Today the Women in High Performance Computing (WHPC) organization announced that Intel Corporation has become its first Corporate Champion. “Women represent less than 15% of the HPC sector; it is essential that we continue to work at the grassroots level and globally to grow the HPC talent pool and benefit from the diversity of talented women and other under-represented groups. With support from Intel, we can begin focusing on global programs and initiatives that can accelerate our goals and amplify our impact.”

Video: Ramping up for Exascale at the National Labs

In this video from the Exascale Computing Project, Dave Montoya from LANL describes the continuous software integration effort at DOE facilities where exascale computers will be located sometime in the next 3-4 years. “A key aspect of the Exascale Computing Project’s continuous integration activities is ensuring that the software in development for exascale can efficiently be deployed at the facilities and that it properly blends with the facilities’ many software components. As is commonly understood in the realm of high-performance computing, integration is very challenging: both the hardware and software are complex, with a huge amount of dependencies, and creating the associated essential healthy software ecosystem requires abundant testing.”

Video: Hewlett Packard Labs teams with Mentor for LightSuite Photonic Compiler

“Mentor’s LightSuite Photonic Compiler represents a quantum leap in automating what has up to now been a highly manual, full-custom process that required deep knowledge of photonics as well as electronics,” said Joe Sawicki, vice president and general manager of the Design-to-Silicon Division at Mentor, a Siemens business. “With the new LightSuite Photonic Compiler, Mentor is enabling more companies to push the envelope in creating integrated photonic designs.”

Intel® Compilers Overview: Scalable Performance for Intel® Processors

Intel Compilers for C/C++ and Fortran empower developers to derive the greatest performance from applications and hardware. In this video, Igor Vorobtsov discusses nuances of Intel compiler features which enable high-level optimization, auto-parallelization, auto-vectorization, dynamic profile guided optimization, detailed optimization reports, inter-procedural optimization (IPO), and much more.

Podcast: Evolving MPI for Exascale Applications

In this episode of Let’s Talk Exascale, Pavan Balaji and Ken Raffenetti describe their efforts to help MPI, the de facto programming model for parallel computing, run as efficiently as possible on exascale systems. “We need to look at a lot of key technical challenges, like performance and scalability, when we go up to this scale of machines. Performance is one of the biggest things that people look at. Aspects with respect to heterogeneity become important.”

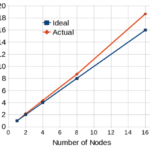

Maximizing Performance of HiFUN* CFD Solver on Intel® Xeon® Scalable Processor With Intel MPI Library

The HiFUN CFD solver shows that the latest-generation Intel Xeon Scalable processor enhances single-node performance due to the availability of large cache, higher core density per CPU, higher memory speed, and larger memory bandwidth. The higher core density improves intra-node parallel performance that permits users to build more compact clusters for a given number of processor cores. This permits the HiFUN solver to exploit better cache utilization that contributes to super-linear performance gained through the combination of a high-performance interconnect between nodes and the highly-optimized Intel® MPI Library.

Video: Intel to Bring AI Technology to future Olympic Games

Today Intel announced a partnership with the International Olympic Committee to use the company’s leading technology to enhance the Olympic Games through 2024. Intel will focus primarily on infusing its 5G platforms, VR, 3D and 360-degree content development platforms, artificial intelligence platforms, and drones, along with other silicon solutions to enhance the Olympic Games. “As a result of Olympic Agenda 2020, the IOC is forging groundbreaking partnerships, said IOC President Thomas Bach. “Intel is a world leader in its field, and we’re very excited to be working with the Intel team to drive the future of the Olympic Games through cutting-edge technology. The Olympic Games provide a connection between fans and athletes that has inspired people around the world through sport and the Olympic values of excellence, friendship and respect. Thanks to our new innovative global partnership with Intel, fans in the stadium, athletes and audiences around the world will soon experience the magic of the Olympic Games in completely new ways.”

NVIDIA Releases PGI 2018 Compilers and Tools

Today NVIDIA announced the availability of PGI 2018. “PGI is the default compiler on many of the world’s fastest computers including the Titan supercomputer at Oak Ridge National Laboratory. PGI production-quality compilers are for scientists and engineers using computing systems ranging from workstations to the fastest GPU-powered supercomputers.”

Podcast: Open MPI for Exascale

In this Let’s Talk Exascale podcast, David Bernholdt from ORNL discusses the Open MPI for Exascale project, which is focusing on the communication infrastructure of MPI, or message-passing interface, an extremely widely used standard for interprocessor communications for parallel computing. “It’s possible that even though applications may make millions or billions of short calls to the MPI library during the course of an execution, performance improvements can have a significant overall impact on the application runtime.”