Astronomers are using iRODS data technology to study the evolution of galaxies and the nature of dark matter. “By partnering with data specialists at UNC-Chapel Hill’s Renaissance Computing Institute (RENCI) who develop the integrated Rule-Oriented Data System (iRODS), researchers and students now have online databases for two large astronomical data sets: the REsolved Spectroscopy of a Local VolumE (RESOLVE) survey and the Environmental COntext (ECO) catalog.”

Video: How Argonne Simulated the Evolution of the Universe

In this Chicago Tonight video, Katrin Heitmann from Argonne National Lab describes one of the most complex simulations of the evolution of the universe ever created. “What we want to do now with these simulations is exactly create this universe in our lab. So we build this model and we put it on a computer and evolve it forward, and now we have created a universe that we can look at and compare it to the real data.”

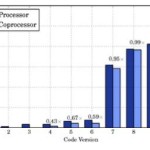

Cosmic Microwave Background Analysis with Intel Xeon Phi

“Modal is a cosmological statistical analysis package that can be optimized to take advantage of a high number of cores. The inner product computations with Modal can be run on the Intel Xeon Phi coprocessor. As a baseline, the entire simulation took about 6 hours on the Intel Xeon processor. Since the inner calculations are independent of each other, this lends itself to using the Intel Xeon Phi coprocessor.”
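The independence argument can be made concrete with a small sketch. Modal's actual basis functions and interfaces are not described here, so the vectors below are random stand-ins and `gram_matrix` is a hypothetical helper: each row of the matrix of inner products depends only on the read-only basis, so rows can be handed to separate workers with no communication between them. Python threads are used only to show the task decomposition; real speedups on CPU-bound work would come from mapping the same decomposition onto processes, OpenMP threads, or coprocessor cores.

```python
from concurrent.futures import ThreadPoolExecutor
import math
import random


def dot(u, v):
    # Correctly rounded floating-point sum of elementwise products.
    return math.fsum(a * b for a, b in zip(u, v))


def gram_matrix(basis, workers=4):
    """Return G with G[i][j] = <basis[i], basis[j]> (hypothetical helper).

    Each row depends only on the read-only basis, so rows are independent
    tasks that can be computed concurrently in any order.
    """
    def row(i):
        return [dot(basis[i], b) for b in basis]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order, so row i lands at index i.
        return list(pool.map(row, range(len(basis))))


def gram_matrix_serial(basis):
    # Reference version: the same inner products computed one after another.
    return [[dot(u, v) for v in basis] for u in basis]


# Stand-in data: 16 random "modes" sampled at 200 points (not Modal's basis).
random.seed(0)
basis = [[random.gauss(0.0, 1.0) for _ in range(200)] for _ in range(16)]
G = gram_matrix(basis)
```

Because no task reads another task's output, the parallel and serial versions produce identical matrices; only the scheduling differs.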

Video: High Performance Clustering for Trillion Particle Simulations

“Modern cosmology and plasma physics codes are capable of simulating trillions of particles on petascale systems. Each time step generated from such simulations is on the order of 10s of TBs. Summarizing and analyzing raw particle data is challenging, and scientists often focus on density structures for follow-up analysis. We develop a highly scalable version of the clustering algorithm DBSCAN and apply it to the largest particle simulation datasets. Our system, called BD-CATS, is the first one to perform end-to-end clustering analysis of trillion particle simulation output. We demonstrate clustering analysis of a 1.4 trillion particle dataset from a plasma physics simulation, and a 10,240^3 particle cosmology simulation utilizing ~100,000 cores in 30 minutes. BD-CATS has enabled scientists to ask novel questions about acceleration mechanisms in particle physics, and has demonstrated qualitatively superior results in cosmology. Clustering is an example of one scientific data analytics problem. This talk will conclude with a broad overview of other leading data analytics challenges across scientific domains, and joint efforts between NERSC and Intel Research to tackle some of these challenges.”
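The clustering step can be illustrated with the textbook, serial form of DBSCAN. This is not BD-CATS: the sketch below uses brute-force neighbor search (quadratic in the number of points) on toy 2-D points, and the parameter names `eps` and `min_pts` are the conventional ones rather than anything taken from BD-CATS. A point with at least `min_pts` neighbors within distance `eps` is a core point; clusters grow outward from core points, and points not reachable from any core point are labeled noise.

```python
import math
from collections import deque

NOISE = -1


def region_query(points, i, eps):
    # Brute-force neighbor search: indices of all points within eps of
    # points[i], including i itself. Large-scale codes use spatial indexes.
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or NOISE."""
    labels = [None] * len(points)
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        # points[i] is a core point: start a new cluster and expand it.
        labels[i] = cluster
        queue = deque(neighbors)
        while queue:
            j = queue.popleft()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: reachable, not core
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # j is also core: keep growing
        cluster += 1
    return labels


points = [(0, 0), (0, 0.1), (0.1, 0), (5, 5), (5, 5.1), (5.1, 5), (10, 10)]
labels = dbscan(points, eps=0.5, min_pts=3)  # [0, 0, 0, 1, 1, 1, -1]
```

A distributed version has to partition the particles across processes and replace the brute-force neighbor search with a spatial index; those are the parts a trillion-particle system must solve, and they are omitted here.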

Podcast: Supercomputing Black Hole Mergers

“General Relativity is celebrating this year a hundred years since its first publication in 1915, when Einstein introduced his theory of General Relativity, which has revolutionized in many ways the way we view our universe. For instance, the idea of a static Euclidean space, which had been assumed for centuries and the concept that gravity was viewed as a force changed. They were replaced with a very dynamical concept of now having a curved space-time in which space and time are related together in an intertwined way described by these very complex, but very beautiful equations.”

Researching Origins of the Universe at the Stephen Hawking Centre for Theoretical Cosmology

In this special guest feature, Linda Barney writes that researchers at the University of Cambridge are using an Intel Xeon Phi coprocessor-based supercomputer from SGI to accelerate discovery efforts. “We have managed to modernize and optimize the main workhorse code used in the research so it now runs at 1/100-1/1000 of the original runtime. This allows us to tackle problems which would have taken unfeasibly long to solve. Secondly, it has opened windows for previously unthinkable research, namely using the MODAL code in cosmological parameter search: this is a problem which is constantly being solved in an iterative process, but adding the MODAL results to the process has only become possible with the improved performance.”

Video: Supernova Cosmology with Python

In this video from PyData Seattle 2015, Rahul Biswas from the University of Washington presents: Supernova Cosmology with Python.

Video: Simulating Cosmic Structure Formation

“Numerical simulations on supercomputers play an ever more important role in astrophysics. They have become the tool of choice to predict the non-linear outcome of the initial conditions left behind by the Big Bang, providing crucial tests of cosmological theories. However, the problem of galaxy and star formation confronts us with a staggering multi-physics complexity and an enormous dynamic range that severely challenges existing numerical methods.”

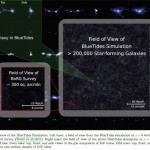

Video: First Galaxies and Quasars in the BlueTides Simulation

“BlueTides has successfully used essentially the entire set of XE6 nodes on the Blue Waters. It follows the evolution of 0.7 trillion particles in a large volume of the universe (600 co-moving Mpc on a side) over the first billion years of the universe’s evolution with a dynamic range of 6 (12) orders of magnitude in space (mass). This makes BlueTides by far the largest cosmological hydrodynamic simulation ever run.”
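The quoted figures support a quick back-of-envelope check of the scales involved. This sketch uses only the numbers in the quote, treats all particle species together, and assumes that "dynamic range in space" means the box side divided by the smallest resolved length; under those assumptions the mean comoving interparticle spacing comes out at about 68 kpc and the implied smallest scale at about 0.6 kpc.

```python
# Back-of-envelope scales for BlueTides, using only the figures quoted above.
# Assumption: "dynamic range in space" = box side / smallest resolved length.

BOX_SIDE_MPC = 600.0           # comoving box side length (from the quote)
N_PARTICLES = 0.7e12           # total particle count, all species (from the quote)
SPATIAL_DYNAMIC_RANGE = 1e6    # "6 orders of magnitude in space"

KPC_PER_MPC = 1000.0

# Mean comoving spacing if the particles filled the box uniformly.
mean_spacing_kpc = BOX_SIDE_MPC / N_PARTICLES ** (1.0 / 3.0) * KPC_PER_MPC

# Smallest length scale implied by six orders of magnitude below the box size.
smallest_scale_kpc = BOX_SIDE_MPC / SPATIAL_DYNAMIC_RANGE * KPC_PER_MPC

print(f"mean interparticle spacing: {mean_spacing_kpc:.1f} kpc")
print(f"implied smallest resolved scale: {smallest_scale_kpc:.2f} kpc")
```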

Hunting Supernovas with Supercomputers

Caltech researchers are using NERSC supercomputers to search for newly born supernovas. The details of their findings appear May 20 in an advance online issue of Nature.