In this video from the 2016 Argonne Training Program on Extreme-Scale Computing, Mark Miller from LLNL leads a panel discussion on Experiences in eXtreme Scale in HPC with FASTMATH team members. “The FASTMath SciDAC Institute is developing and deploying scalable mathematical algorithms and software tools for reliable simulation of complex physical phenomena and collaborating with U.S. Department of Energy (DOE) domain scientists to ensure the usefulness and applicability of our work. The focus of our work is strongly driven by the requirements of DOE application scientists who work extensively with mesh-based, continuum-level models or particle-based techniques.”

Video: Chapel – Productive, Multiresolution Parallel Programming

“Engineers at Cray noted that the HPC community was hungry for alternative parallel programming languages and developed Chapel as part of our response. The reaction from HPC users so far has been very encouraging—most would be excited to have the opportunity to use Chapel once it becomes production-grade.”

Paul Messina Presents: A Path to Capable Exascale Computing

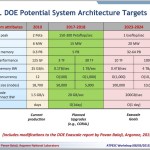

Paul Messina presented this talk at the 2016 Argonne Training Program on Extreme-Scale Computing. “The President’s NSCI initiative calls for the development of Exascale computing capabilities. The U.S. Department of Energy has been charged with carrying out that role in an initiative called the Exascale Computing Project (ECP). Messina has been tapped to lead the project, heading a team with representation from the six major participating DOE national laboratories: Argonne, Los Alamos, Lawrence Berkeley, Lawrence Livermore, Oak Ridge and Sandia. The project program office is located at Oak Ridge.

Apply Now for Supercomputing Summer School

The summer of 2016 will see a raft of summer schools and other initiatives to train more people in high-performance computing, including efforts to increase the diversity of HPC specialists with a specific program aimed at ethnic minorities. But interested students need to get their applications in now.

Apply Now for Argonne Training Program on Extreme-Scale Computing

Computational scientists now have the opportunity to apply for the upcoming Argonne Training Program on Extreme-Scale Computing (ATPESC), to take place from July 31-August 12, 2016. “With the challenges posed by the architecture and software environments of today’s most powerful supercomputers, and even greater complexity on the horizon from next-generation and exascale systems, there is a critical need for specialized, in-depth training for the computational scientists poised to facilitate breakthrough science and engineering using these amazing resources. This program provides intensive hands-on training on the key skills, approaches and tools to design, implement, and execute computational science and engineering applications on current supercomputers and the HPC systems of the future. As a bridge to that future, this two-week program to be held at the Pheasant Run Resort in suburban Chicago fills many gaps that exist in the training computational scientists typically receive through formal education or shorter courses.”

Video: Chapel – Productive, Multiresolution Parallel Programming

Brad Chamberlain from Cray presented this talk at the recent Argonne Training Program on Extreme-Scale Computing. “We believe Chapel can greatly improve productivity for current and emerging HPC architectures and meet emerging mainstream needs for parallelism and locality.”

Video: Debugging and Profiling Your HPC Applications

David Lecomber from Allinea Software presented this talk at the Argonne Training Program on Extreme-Scale Computing. “From climate modeling to astrophysics, from financial modeling to engine design, the power of clusters and supercomputers advances the frontiers of knowledge and delivers results for industry. Writing and deploying software that exploits that computing power is a demanding challenge – it needs to run fast, and run right. That’s where Allinea comes in.”

Bill Gropp Presents: MPI and Hybrid Programming Models

Bill Gropp presented this talk at the Argonne Training Program on Extreme-Scale Computing. “Where it is used as an alternative to MPI, OpenMP often has difficulty achieving the performance of MPI (MPI’s much-criticized requirement that the user directly manage data motion ensures that the programmer does in fact manage that memory motion, leading to improved performance). This suggests that other programming models can be productively combined with MPI as long as they complement, rather than replace, MPI.”

Video: Network Architecture Trends

Pavan Balaji from Argonne presented this talk at the Argonne Training Program on Extreme-Scale Computing. “More cores will drive the network, with more sharing of the network infrastructure. The aggregate amount of communication from each node will increase moderately, but will be divided into many smaller messages.”

James Reinders Presents: Vectorization (SIMD) and Scaling (TBB and OpenMP)

James Reinders from Intel presented this talk at the Argonne Training Program on Extreme-Scale Computing. “We need to embrace explicit vectorization in our programming. But, generally use parallelism first (tasks, threads, MPI, etc.).”