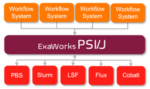

ExaWorks is an Exascale Computing Project (ECP)–funded project that provides access to hardened and tested workflow components through a software development kit (SDK). Developers use this SDK and associated APIs to build and deploy production-grade, exascale-capable workflows on US Department of Energy (DOE) and other computers. The prestigious Gordon Bell Prize competition highlighted the success of the ExaWorks SDK when the winner and two of three finalists in the 2020 Association for Computing Machinery (ACM) Gordon Bell Special Prize for High Performance Computing–Based COVID-19 Research competition leveraged ExaWorks technologies.

Exascale: Pursuing Clean Energy Catalysts with Aurora

Argonne National Laboratory has announced that researchers are developing exascale software tools to enable the design of new chemicals and chemical processes for clean energy production. Argonne is building one of the nation’s first exascale systems, Aurora. To prepare codes for the architecture and scale of the new supercomputer, 15 research teams are taking part […]

Outgoing Exascale Computing Project Director Doug Kothe Takes on New Role at Sandia Labs

Doug Kothe knows about transitions, including transitions on a grand scale. The outgoing director of the U.S. Department of Energy’s Exascale Computing Project helped transition the supercomputing industry into the exascale era. He took the ECP helm in October 2017, and in May 2022, the project reached a milestone with the certification of the Frontier […]

Power Grid Modeling Tool Launched on Frontier Exascale Supercomputer

Exascale Grid Optimization (ExaGO), a power grid simulation and optimization platform developed by Pacific Northwest National Laboratory (PNNL), is the first of its kind to run on Oak Ridge National Laboratory’s (ORNL) Frontier, the first supercomputer in the world to reach exascale. Frontier, which was launched this spring, can calculate more than 1 quintillion operations per second and […]

LLNL’s Lori Diachin Named Director of Exascale Computing Project

Lawrence Livermore National Laboratory’s Lori Diachin will take over as director of the Department of Energy’s Exascale Computing Project on June 1, “guiding the successful, multi-institutional high-performance computing effort through its final stages,” ECP said in its announcement. Diachin, who is currently the principal deputy associate director for LLNL’s Computing Directorate, has served as ECP’s […]

ASCR: Exascale to Burst Bubbles that Block Carbon Capture

Bubbles could block a promising technology that would separate carbon dioxide from industrial emissions, capturing the greenhouse gas before it contributes to climate change. A team of researchers with backing from the Department of Energy’s Exascale Computing Project (ECP) is out to burst the barrier, using a code that captures the floating blisters and provides insights to deter them. Chemical looping reactors (CLRs) combine fuels such as methane with oxygen from metal oxide particles before combustion. The reaction produces water vapor and carbon dioxide, which can easily be separated to create a pure CO2 stream for sequestration or industrial use. Standard post-combustion separation must pull carbon dioxide from a multigas mixture.

‘Combustion-PELE’ on Frontier: Exascale-Class HPC for Improving Engine Efficiency

“Pele” is the Exascale Computing Project’s application suite for high-fidelity detailed simulations of turbulent combustion in open and confined domains. The suite of C++-based codes comprises several repositories, namely PeleC (compressible solvers), PeleLM/LMeX (low-Mach flow solvers), PelePhysics (thermodynamics, transport, and chemistry models), and PeleMP (multiphysics models), that integrate block-structured adaptive mesh refinement (AMR) and cut-cell methods to simulate multifaceted combustion processes. Pele simulations provide the detailed physics and geometrical flexibility to evaluate the design and operational characteristics of clean, efficient, next-generation combustion technologies, including advanced internal combustion engines (ICEs) for automotive, industrial, and aviation applications.

Let’s Talk Exascale: ALCF’s Katherine Riley Talks Aurora Deployment, Impactful Science and Partnering with ECP

In this episode of the “Let’s Talk Exascale” podcast, the Exascale Computing Project’s (ECP) Scott Gibson talks with Katherine Riley, director of science at the Argonne Leadership Computing Facility. Her mission is to lead a team of ALCF computational science experts who work with facility users to maximize their use of the facility’s computing resources. […]

Quantum Computing Users Work Alongside Classical Supercomputers: An Interview with Travis Humble at Oak Ridge Lab

As the high-performance computing (HPC) community looks beyond the brink of Moore’s Law for solutions to accelerate future systems, one technology at the forefront is quantum computing, which is amassing billions of dollars of global R&D funding each year. Perhaps it’s no surprise that HPC centers — including the Oak Ridge Leadership Computing Facility (OLCF), home of the world’s first exascale supercomputer, Frontier — are finding ways to leverage

Scientists Using Frontier Supercomputer Win 2022 Gordon Bell Prize, Another Frontier Team Named Prize Finalist

[SPONSORED CONTENT] How many researchers can say they’ve run their scientific job not only on the AMD-powered Frontier supercomputer, the world’s no. 1 ranked HPC system and the first exascale-class machine, but also on Fugaku, Summit and Perlmutter, the world’s second-, fifth- and eighth-ranked HPC systems, respectively? But that’s the case with an international group of researchers working on particle-in-cell simulations who have developed code that won….