With help from DOE supercomputers, a USC-led team expands models of the fault system beneath its feet, aiming to predict its outbursts. For their 2020 INCITE work, SCEC scientists and programmers will have access to 500,000 node hours on Argonne’s Theta supercomputer, which delivers as much as 11.69 petaflops. The team is using Theta “mostly for dynamic earthquake ruptures,” Goulet says. “That is using physics-based models to simulate and understand details of the earthquake as it ruptures along a fault, including how the rupture speed and the stress along the fault plane changes.”

NPR Podcast: Scientists Use Supercomputers To Search For Drugs To Combat COVID-19

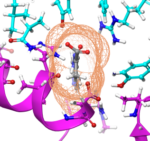

In this segment from the NPR Here and Now program, Joe Palca talks to researchers using ORNL supercomputers to fight COVID-19. “Supercomputers have joined the race to find a drug that might help with COVID-19. Scientists are using computational techniques to see if any drugs already on the shelf might be effective against the disease. The two researchers performed simulations on Summit of more than 8,000 compounds to screen for those that are most likely to bind to the main “spike” protein of the coronavirus, rendering it unable to infect host cells.”

ORNL Enlists #1 Summit Supercomputer to Combat Coronavirus

Researchers at ORNL used the Summit supercomputer to identify 77 small-molecule drug compounds that might warrant further study in the fight against the SARS-CoV-2 coronavirus, which is responsible for the COVID-19 disease outbreak. “The two researchers performed simulations on Summit of more than 8,000 compounds to screen for those that are most likely to bind to the main “spike” protein of the coronavirus, rendering it unable to infect host cells.”

New Leaders Join Exascale Computing Project

The US Department of Energy’s Exascale Computing Project has announced three leadership staff changes within the Hardware and Integration (HI) group. “Over the past several months, ECP’s HI team has been adapting its organizational structure and key personnel to prepare for the next phase of exascale hardware and software integration.”

Argonne Publishes AI for Science Report

Argonne National Lab has published a comprehensive AI for Science Report based on a series of Town Hall meetings held in 2019. Hosted by Argonne, Oak Ridge, and Berkeley National Laboratories, the four town hall meetings were attended by more than 1,000 U.S. scientists and engineers. The goal of the town hall series was to examine scientific opportunities in the areas of artificial intelligence (AI), Big Data, and high-performance computing (HPC) in the next decade, and to capture the big ideas, grand challenges, and next steps to realizing these opportunities.

How the Titan Supercomputer was Recycled

In this special guest feature, Coury Turczyn from ORNL tells the untold story of what happens to high-end supercomputers like Titan after they have been decommissioned. “Thankfully, it did not include a trip to the landfill. Instead, Titan was carefully removed, trucked across the country to one of the largest IT asset conversion companies in the world, and disassembled for recycling in compliance with the international Responsible Recycling (R2) Standard. This huge undertaking required diligent planning and execution by ORNL, Cray (a Hewlett Packard Enterprise company), and Regency Technologies.”

MLPerf-HPC Working Group seeks participation

In this special guest feature, Murali Emani from Argonne writes that a team of scientists from DOE labs has formed a working group called MLPerf-HPC to focus on benchmarking machine learning workloads for high-performance computing. “As machine learning (ML) is becoming a critical component to help run applications faster, improve throughput and understand the insights from the data generated from simulations, benchmarking ML methods with scientific workloads at scale will be important as we progress towards next generation supercomputers.”

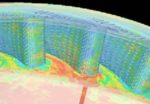

GE Research Leverages World’s Top Supercomputer to Boost Jet Engine Efficiency

GE Research has been awarded access to the world’s #1-ranked supercomputer to discover new ways to optimize the efficiency of jet engines and power generation equipment. Michal Osusky, the project’s leader from GE Research’s Thermosciences group, says access to the supercomputer and support team at OLCF will greatly accelerate insights into turbomachinery design improvements that lead to more efficient jet engines and power generation assets. “We’re able to conduct experiments at unprecedented levels of speed, depth and specificity that allow us to perceive previously unobservable phenomena in how complex industrial systems operate. Through these studies, we hope to innovate new designs that enable us to propel the state of the art in turbomachinery efficiency and performance.”

Podcast: Solving Multiphysics Problems at the Exascale Computing Project

In this Let’s Talk Exascale Podcast, Stuart Slattery and Damien Lebrun-Grandie from ORNL describe how they are readying algorithms for next-generation supercomputers at the Department of Energy. “The mathematical library development portfolio of the Software Technology (ST) research focus area of the ECP provides general tools to implement complex algorithms. These algorithms are designed to scale up for supercomputers so that ECP teams can then use them to accelerate the development and improve the performance of science applications on DOE high-performance computing architectures.”

Podcast: Rewriting NWChem for Exascale

In this Let’s Talk Exascale podcast, researchers from the NWChemEx project team describe how they are readying the popular code for exascale. The NWChemEx team’s most significant success so far has been to scale coupled-cluster calculations to a much larger number of processors. “In NWChem we had the global arrays as a toolkit to be able to build parallel applications.”