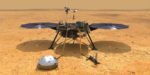

Exascale Software and NASA’s ‘Nerve-Racking 7 Minutes’ with Mars Landers

NASA supercomputers have helped several Mars landers survive the nerve-racking seven minutes of terror. During this hair-raising interval, a lander enters the Martian atmosphere and needs to automatically execute many crucial actions in precise order…

The Hyperion-insideHPC Interviews: Rich Brueckner and Doug Ball Talk CFD, Autonomous Mobility and Driving Down HPC Package Sizing

Doug Ball is a leading expert in computational fluid dynamics and aerodynamic engineering, disciplines he became involved with more than 40 years ago. In this interview with the late Rich Brueckner of insideHPC, Ball discusses the increased scale and model complexity that HPC technology has come to handle and, looking to the future, his anticipation […]

Podcast: ColdQuanta Serves Up Some Bose-Einstein Condensate

“ColdQuanta is headed by an old pal of ours, Bo Ewald, and has just come out of stealth mode into the glaring spotlight of RadioFreeHPC. When you freeze a gas of bosons at low density to near absolute zero, you start to get macroscopic access to microscopic quantum mechanical effects, which is a pretty big deal. Once the quantum mechanics start, you can control it, change it, and get computations out of it. The secret sauce for ColdQuanta is served cold, all the way down into the micro-kelvins, and kept very local, which makes it easier to get your condensate.”

DARPA Grant to Foster Practical Quantum Computing with Rigetti

Today Rigetti Computing announced the company has been awarded up to $8.6 million from DARPA to develop a full-stack system with proven quantum advantage for solving real-world problems. “We believe strongly in an integrated hardware and software approach, which is why we’re bringing together the scalable Rigetti chip architecture with the algorithm design and optimization techniques pioneered by the NASA-USRA team.”

Google and NASA Achieve Quantum Supremacy

Today Google officially announced that it has achieved a major computing milestone. In partnership with NASA and Oak Ridge National Laboratory, the company has demonstrated the ability to compute in seconds what would take even the largest and most advanced supercomputers thousands of years, achieving a milestone known as quantum supremacy. “Achieving quantum supremacy means we’ve been able to do one thing faster, not everything faster,” said Eleanor Rieffel, co-author on the paper.

ColdQuanta to Accelerate Commercial Deployment of Quantum Atomic Systems

Today ColdQuanta announced it has been awarded $1M from NASA’s Civilian Commercialization Readiness Pilot Program. The program will enable ColdQuanta to develop significantly smaller cold atom systems with a high level of ruggedness. This award expands on the success of ColdQuanta’s Quantum Core technology, which was developed with the Jet Propulsion Laboratory and is currently operating aboard the International Space Station.

Podcast: Quantum Supremacy? Yes and No!

In this podcast, the RadioFreeHPC team discusses the Google/NASA paper, titled “Quantum Supremacy Using a Programmable Superconducting Processor”, that was published and then unpublished. “The tantalizing promise of quantum computers is that certain computational tasks might be executed exponentially faster on a quantum processor than on a classical processor. A fundamental challenge is to build a high-fidelity processor capable of running quantum algorithms in an exponentially large computational space.”

Dr. Steven Squyres from Cornell to Keynote SC19

Dr. Steven Squyres from Cornell University will keynote the SC19 conference in Denver. His talk will be entitled “Exploring the Solar System with the Power of Technology.” Squyres’ research focuses on the robotic exploration of planetary surfaces, the history of water on Mars, the geophysics and tectonics of icy satellites, the tectonics of Venus, and planetary gamma-ray and X-ray spectroscopy.

Aitken Supercomputer from HPE to Support NASA Moon Missions

A new HPE supercomputer at NASA’s Ames Research Center will run modeling and simulation workloads for lunar landings. The 3.69-petaflop “Aitken” system is a custom-designed supercomputer that will support modeling and simulations of entry, descent, and landing (EDL) for the agency’s missions and its Artemis program, which aims to land the next humans in the lunar South Pole region by 2024.

Video: Reliving the First Moon Landing with NVIDIA RTX Real-Time Ray Tracing

In this video, Apollo 11 astronaut Buzz Aldrin looks back at the first moon landing with help from a reenactment powered by NVIDIA RTX GPUs with real-time ray tracing technology. “The result: a beautiful, near-cinematic depiction of one of history’s great moments. That’s thanks to NVIDIA RTX GPUs, which allowed our demo team to create an interactive visualization that incorporates light in the way it actually works, giving the scene uncanny realism.”