Rahul Ramachandran from NASA gave this talk at the HPC User Forum. “NASA’s Earth Science Division (ESD) missions help us to understand our planet’s interconnected systems, from a global scale down to minute processes. ESD delivers the technology, expertise and global observations that help us to map the myriad connections between our planet’s vital processes and the effects of ongoing natural and human-caused changes.”

Job of the Week: HPC Specialist in Software Development at DKRZ

The German Climate Computing Centre (DKRZ) is seeking an HPC Specialist in Software Development in our Job of the Week. “DKRZ is involved in numerous national and international projects in the field of high-performance computing for climate and weather research. In addition to the direct user support, depending on your interest and ability, you will also be able to participate in this development work.”

Gordon Bell Prize Highlights the Impact of AI

In this special guest feature from Scientific Computing World, Robert Roe reports on the Gordon Bell Prize finalists for 2018. “The finalists’ research ranges from AI to mixed precision workloads, with some taking advantage of the Tensor Cores available in the latest generation of Nvidia GPUs. This highlights the impact of AI and GPU technologies, which are opening up not only new applications to HPC users but also the opportunity to accelerate mixed precision workloads on large scale HPC systems.”

NOAA and NCAR team up for Weather and Climate Modeling

The United States is making exciting changes to how computer models will be developed in the future to support the nation’s weather and climate forecast system. NOAA and the National Center for Atmospheric Research (NCAR) have joined forces to help the nation’s weather and climate modeling scientists achieve mutual benefits through more strategic collaboration, shared resources and information.

NERSC: Sierra Snowpack Could Drop Significantly By End of Century

A future warmer world will almost certainly feature a decline in fresh water from the Sierra Nevada mountain snowpack. Now a new study by Berkeley Lab has found that the headwater regions of California’s 10 major reservoirs, which represent nearly half of the state’s surface storage, could see on average a 79 percent drop in peak snowpack water volume by 2100. “What’s more, the study found that peak timing, which has historically been April 1, could move up by as much as four weeks, meaning snow will melt earlier, thus increasing the time lag between when water is available and when it is most in demand.”

NOAA Report: Effects of Persistent Arctic Warming Continue to Mount

NOAA is out with its 2018 Arctic Report Card and the news is not good, folks. Issued annually since 2006, the Arctic Report Card is a timely and peer-reviewed source for clear, reliable and concise environmental information on the current state of different components of the Arctic environmental system relative to historical records. “The Report Card is intended for a wide audience, including scientists, teachers, students, decision-makers and the general public interested in the Arctic environment and science.”

Video: Weather and Climate Modeling at Convection-Resolving Resolution

David Leutwyler from ETH Zurich gave this talk at the 2017 Chaos Communication Congress. “The representation of thunderstorms (deep convection) and rain showers in climate models represents a major challenge, as this process is usually approximated with semi-empirical parameterizations due to the lack of appropriate computational resolution. Climate simulations using kilometer-scale horizontal resolution allow explicitly resolving deep convection and thus allow for an improved representation of the water cycle. We present a set of such simulations covering Europe and global computational domains.”

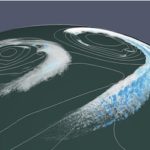

GPUs Power Near-global Climate Simulation at 1 km Resolution

A new peer-reviewed paper is reportedly causing a stir in the climatology community. “The best hope for reducing long-standing global climate model biases is through increasing the resolution to the kilometer scale. Here we present results from an ultra-high resolution non-hydrostatic climate model for a near-global setup running on the full Piz Daint supercomputer on 4888 GPUs.”

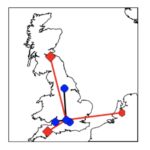

Panasas Upgrades JASMIN Super-Data-Cluster Facility to 20PB

Today Panasas announced that the Science and Technology Facilities Council’s (STFC) Rutherford Appleton Laboratory (RAL) in the UK has expanded its JASMIN super-data-cluster with an additional 1.6 petabytes of Panasas ActiveStor storage, bringing total storage capacity to 20PB. This expansion required the formation of the largest realm of Panasas storage worldwide, which is managed by a single systems administrator. Thousands of users worldwide find, manipulate and analyze data held on JASMIN, which processes an average of 1-3PB of data every day.

Supercomputers Turn the Clock Back on Storms with “Hindcasting”

Researchers are using supercomputers at LBNL to determine how global climate change has affected the severity of storms and resultant flooding. “The group used the publicly available model, which can be used to forecast future weather, to ‘hindcast’ the conditions that led to the Sept. 9-16, 2013 flooding around Boulder, Colorado.”