“Combining MPI and OpenMP is a topic that many developers have explored in search of the optimal solution. Using OpenMP for outer loops with MPI within, or creating separate MPI processes and using OpenMP within each, can lead to different levels of performance. In most cases, determining which method yields the best results requires a deep understanding of the application, not just rearranging directives.”

Shared Memory and MPI 3.0

As multi-socket and then multi-core systems have become the standard, the Message Passing Interface (MPI) has become one of the most popular programming models for applications that run in parallel across many sockets and cores. Shared memory programming interfaces, such as OpenMP, have allowed developers to take advantage of systems that combine many individual servers with shared memory inside each server. However, this has meant using two different programming models at the same time. The MPI 3.0 standard introduces a new MPI interprocess shared memory extension (MPI SHM).

Call for Submissions: EuroMPI in Edinburgh

EuroMPI has issued its Call for Submissions. The aim of this conference is to bring together all of the stakeholders involved in developments and applications related to the Message Passing Interface (MPI). The preeminent meeting for users, developers and researchers to interact and discuss new developments and applications of message-passing parallel computing, EuroMPI takes place Sept. 25-28 in Edinburgh.

Video: Microsoft Azure for Engineering Analysis and Simulation

Tejas Karmarkar from Microsoft presented this talk at SC15. “Azure provides on-demand compute resources that enable you to run large parallel and batch compute jobs in the cloud. Extend your on-premises HPC cluster to the cloud when you need more capacity, or run work entirely in Azure. Scale easily and take advantage of advanced networking features such as RDMA to run true HPC applications using MPI to get the results you want, when you need them.”

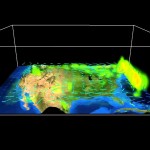

JYU Sets World Record for Pore-scale Flow Simulations

An interdisciplinary research team from JYU in Finland has set a new world record in the field of fluid flow simulations through porous materials. The team, coordinated by Dr. Keijo Mattila from the University of Jyväskylä, used the world’s largest 3D images of a porous material (synthetic X-ray tomography images of the microstructure of Fontainebleau sandstone) and successfully simulated fluid flow through a sample 1.5 cubic centimeters in size at submicron resolution.

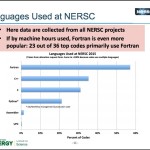

Video: Using OpenMP at NERSC

“This presentation will describe how OpenMP is used at NERSC. NERSC is the primary supercomputing facility for the Office of Science in the US Department of Energy (DOE). Our next production system will be an Intel Xeon Phi Knights Landing (KNL) system, with 60+ cores per node and 4 hardware threads per core. The recommended programming model is hybrid MPI/OpenMP, which also promotes portability across different system architectures.”

Mellanox Showcases Switch-IB 2 on the Road to Exascale at SC15

In this video from SC15, Scot Schultz from Mellanox describes the company’s new Switch-IB 2, the new generation of its InfiniBand switch optimized for High-Performance Computing, Web 2.0, database and cloud data centers, capable of 100Gb/s per port speeds. “Switch-IB 2 is the world’s first smart network switch that offloads MPI operations from the CPU to the network to deliver 10X performance improvements. Switch-IB 2 enables a performance breakthrough in building the next generation of scalable and data-intensive data centers, enabling users to gain a competitive advantage.”

Pairwise DNA Optimization using Intel Xeon Phi

The Smith-Waterman algorithm is widely used for pairwise DNA sequence alignment. The computation, which consists of looking for patterns in very long strings of the DNA alphabet, is very demanding. Using the Intel Xeon Phi, tremendous performance gains can be obtained, as long as the algorithms have been modified to take advantage of parallelism.
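
For readers unfamiliar with the algorithm, the following is a minimal serial sketch of Smith-Waterman local alignment scoring in Python. It is not the Xeon Phi implementation discussed in the article. The function name `smith_waterman_score` and the scoring values (match +2, mismatch -1, linear gap penalty -1) are assumptions chosen for illustration only.

```python
def smith_waterman_score(a, b, match=2, mismatch=-1, gap=-1):
    """Return the best local alignment score between sequences a and b.

    The scoring parameters are illustrative assumptions, not values
    taken from the article.
    """
    # Only the previous row of the dynamic-programming matrix is needed
    # to compute the current one, so two rows are kept instead of the
    # full (len(a)+1) x (len(b)+1) table.
    prev = [0] * (len(b) + 1)
    best = 0
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            sub = match if a[i - 1] == b[j - 1] else mismatch
            curr[j] = max(
                0,                  # start a new local alignment here
                prev[j - 1] + sub,  # align a[i-1] with b[j-1]
                prev[j] + gap,      # gap in b
                curr[j - 1] + gap,  # gap in a
            )
            best = max(best, curr[j])
        prev = curr
    return best


if __name__ == "__main__":
    print(smith_waterman_score("TTACGTTT", "GGACGGG"))
```

Each cell depends only on its left, upper, and upper-left neighbors, so all cells on the same anti-diagonal are independent of one another. That independence is what parallel and vectorized versions of the algorithm typically exploit.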

PGI Accelerator Compilers Add OpenACC Support for x86

“Our goal is to enable HPC developers to easily port applications across all major CPU and accelerator platforms with uniformly high performance using a common source code base,” said Douglas Miles, director of PGI Compilers & Tools at NVIDIA. “This capability will be particularly important in the race towards exascale computing in which there will be a variety of system architectures requiring a more flexible application programming approach.”

WRF Microphysics Optimization

“Microphysics provides atmospheric heat and moisture tendencies. This module has been optimized to take advantage of the Intel Xeon Phi coprocessor. However, some manual optimization can lead to even greater performance gains. By using manual optimizations, the overall speedup on a host CPU (Intel Xeon E5-2670) was 2.8X, while the speedup on the Intel Xeon Phi coprocessor was 3.5X.”