TSMC announced it has signed a preliminary agreement with the U.S. Dept. of Commerce for up to $6.6 billion under the CHIPS and Science Act. TSMC also announced plans to build a third fab at its TSMC Arizona site in Phoenix using 2nm or more advanced processes….

TSMC in the USA: Up to $6.6B in CHIPS Act Funding for 3rd (2nm or More) Arizona Fab

HPC News Bytes 20240408: Chips Ahoy! …and Quantum Error Rate Progress

A good April morning to you! Chips dominate the HPC-AI news landscape, which has become something of an industry commonplace of late. The items include: TSMC’s Arizona fab on schedule, the Dutch government’s pitch to ASML, the Intel foundry business’s losses, TSMC’s CoWoS capacity expansion, SK hynix’s investment in Indiana and Purdue, and Quantinuum and Microsoft’s report of 14,000 error-free instances.

Classiq and Quantum Intelligence Partner on Drug Development

SEOUL, SOUTH KOREA and TEL AVIV, ISRAEL | April 04, 2024 — Quantum computing software company Classiq and Quantum Intelligence Corp. today announced the launch of joint research for drug development by applying quantum computing to pharmacology. The collaboration is under the auspices of Classiq’s Quantum Computing For Life Sciences & Healthcare Center, launched with NVIDIA […]

SK hynix to Invest $3.9B in Indiana HBM Fab and R&D with Purdue

Memory chip company SK hynix announced it will invest $3.87 billion in West Lafayette, Indiana to build an advanced packaging fabrication and R&D facility for AI products. The project, which the company said is the first of its kind in the U.S., will be an advanced….

HPC News Bytes 20240401: A $100B AI Data Center, Eviden Says It’s Healthy, Alibaba’s RISC-V Chip, New Optical Interconnect Group, Nvidia Fights CUDA Translation

Happy April Fool’s Day! It was, as always, an interesting week in the world of HPC-AI. This edition of HPC News Bytes includes commentary on: Microsoft and….

Asmeret Asefaw Berhe Issues Letter of Farewell as Director of DOE Office of Science

Following last week’s news of her departure as director of the U.S. Department of Energy’s Office of Science, a post she has held since May 2022, Dr. Asmeret Asefaw Berhe has issued a letter of farewell to her agency and White House colleagues. Dr. Berhe is currently on leave from the….

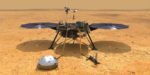

Exascale Software and NASA’s ‘Nerve-Racking 7 Minutes’ with Mars Landers

NASA supercomputers have helped several Mars landers survive the nerve-racking seven minutes of terror. During this hair-raising interval, a lander enters the Martian atmosphere and needs to automatically execute many crucial actions in precise order….

ALCF Announces Online AI Testbed Training Workshops

The Argonne Leadership Computing Facility has announced a series of training workshops to introduce users to the novel AI accelerators deployed at the ALCF AI Testbed. The four individual workshops will introduce participants to the architecture and software of the SambaNova DataScale SN30, the Cerebras CS-2 system, the Graphcore Bow Pod system, and the GroqRack […]

EuroHPC JU Energy Efficiency Project REGALE Comes to End

Munich, 26 March 2024 – This March marks the end of REGALE, a European project, funded by the EuroHPC Joint Undertaking, which has carried out research into the development of new software for high performance computing (HPC) centres with a focus on energy efficiency. After three years of research, the project now provides a toolchain that […]

@HPCpodcast: Matt Sieger of OLCF-6 on the Post-Exascale ‘Discovery’ Vision

What does a supercomputer center do when it’s operating two systems among the TOP-10 most powerful in the world — one of them the first system to cross the exascale milestone? It starts planning its successor. The center is Oak Ridge National Lab, a U.S. Department….